mirror of https://github.com/grafana/loki

2012 Commits (Alex3k-patch-2)

| Author | SHA1 | Message | Date |
|---|---|---|---|
| | de2b0c6e9d | Rename per-request query limits. (#9011)<br>**What this PR does / why we need it**: `maxQueryTime` is more in line with the other per-request limits we have.<br>**Checklist** - [ ] Reviewed the [`CONTRIBUTING.md`](https://github.com/grafana/loki/blob/main/CONTRIBUTING.md) guide (**required**) - [ ] Documentation added - [x] Tests updated - [ ] `CHANGELOG.md` updated - [ ] Changes that require user attention or interaction to upgrade are documented in `docs/sources/upgrading/_index.md` | 3 years ago |
| | cce3d3bed9 | chore(format_query): Change response type to `application/json` (#9016) | 3 years ago |
|

|

acb40ed40e

|

Eager stream merge (#8968)

This PR introduces a specialized heap based datastructure to merge

incoming log results in the frontend. Recently we've experienced an

increase in OOMs on frontends due to logs queries which match lots of

data. Sharded requests in loki split based on the amount of data we

expect and some queries see thousands of sub requests. For log queries,

we'll fetch up the `limit` from each shard, return them to the frontend,

and merge. High shard counts * limit log lines, especially combined with

large log lines (in byte terms) are accumulated on the frontend. Once

they all are received, the frontend merges them. This creates

opportunity for OOMs as it can hold up a lot of memory.

This PR addresses one of these problems by eagerly accumulating

responses as they're received and only retaining a total `limit` number

of entries. There's still OOM potential due to race conditions between

sub requests returning to the query-frontend and the query-frontend

merging other sub requests, but this definitely improves the situation.

I've been able to consistently run large limited queries that touch TBs

of data (i.e. `{cluster=~".+"} |= "a"`) that previously OOMed frontends.
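The eager accumulation described above can be sketched roughly as follows. This is a minimal illustration, not the code from the PR: the `entry` and `accumulator` types and their methods are hypothetical names, and it assumes a backward query in which the newest `limit` entries are kept.

```go
package main

import (
	"container/heap"
	"fmt"
	"sort"
)

// entry is a hypothetical, simplified log entry (not Loki's real type).
type entry struct {
	ts   int64 // timestamp in nanoseconds
	line string
}

// minHeap keeps the oldest retained entry at the root so it is evicted first.
type minHeap []entry

func (h minHeap) Len() int           { return len(h) }
func (h minHeap) Less(i, j int) bool { return h[i].ts < h[j].ts }
func (h minHeap) Swap(i, j int)      { h[i], h[j] = h[j], h[i] }
func (h *minHeap) Push(x any)        { *h = append(*h, x.(entry)) }
func (h *minHeap) Pop() any {
	old := *h
	n := len(old)
	e := old[n-1]
	*h = old[:n-1]
	return e
}

// accumulator retains at most limit of the newest entries seen so far.
type accumulator struct {
	limit int
	h     minHeap
}

func newAccumulator(limit int) *accumulator { return &accumulator{limit: limit} }

// add merges one sub-request response as soon as it arrives.
func (a *accumulator) add(resp []entry) {
	if a.limit <= 0 {
		return
	}
	for _, e := range resp {
		if len(a.h) < a.limit {
			heap.Push(&a.h, e)
			continue
		}
		// The heap is full: replace the oldest entry only if e is newer.
		if e.ts > a.h[0].ts {
			a.h[0] = e
			heap.Fix(&a.h, 0)
		}
	}
}

// result returns the retained entries, newest first.
func (a *accumulator) result() []entry {
	out := append([]entry(nil), a.h...)
	sort.Slice(out, func(i, j int) bool { return out[i].ts > out[j].ts })
	return out
}

func main() {
	acc := newAccumulator(3)
	acc.add([]entry{{5, "e"}, {1, "a"}})
	acc.add([]entry{{4, "d"}, {2, "b"}, {6, "f"}})
	for _, e := range acc.result() {
		fmt.Println(e.ts, e.line)
	}
}
```

With this shape, memory held by the merger is bounded by `limit` entries regardless of how many sub-requests return, although responses that are still in flight are not covered by the bound.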

---------

Signed-off-by: Owen Diehl <ow.diehl@gmail.com>

|

3 years ago |

|

|

2ae80d7166

|

Align common instance_addr with memberlist advertise_addr (#8959)

|

3 years ago |

|

|

62403350a5

|

remove redundant splitby middleware (#8996)

Found this double-copied line, which is a mistake. This PR removes one of the copies, which won't change behavior (besides removing duplicate spans/etc). |

3 years ago |

|

|

1fcefe8efa

|

index-gateway: fix failure in initializing index gateway when boltdb-shipper is not being used (#8755)

**What this PR does / why we need it**: When only `tsdb` index is being used in the schema without configuring `boltdb-shipper` index store, we fail to initialize `index-gateway` since it also tries to build `boltdb-shipper` index client. This reference is used for serving `QueryIndex` calls which are for serving index from boltdb files. This PR fixes the issue by adding a check for the existence of `boltdb-shipper` in the schema config before initializing an index client. On the index gateway side, it adds a `failingIndexClient`, which errors when someone tries to query it. **Checklist** - [ ] `CHANGELOG.md` updated --------- Co-authored-by: Travis Patterson <masslessparticle@gmail.com> Co-authored-by: Dylan Guedes <djmgguedes@gmail.com> Co-authored-by: Travis Patterson <travis.patterson@grafana.com> |

3 years ago |

|

|

0f98b45d8d

|

Loki: Add UsageStatsURL to usagestats Config (#8779)

**What this PR does / why we need it**: Reports used to be sent to a fixed URL, `usageStatsURL`. This URL is now configurable, with the old URL as the default. Updated tests to use config for custom URLs instead of changing a package variable. **Which issue(s) this PR fixes**: Fixes #6502 **Special notes for your reviewer**: **Checklist** - [x] Reviewed the [`CONTRIBUTING.md`](https://github.com/grafana/loki/blob/main/CONTRIBUTING.md) guide (**required**) - [x] Documentation added - [x] Tests updated - [ ] `CHANGELOG.md` updated - [ ] Changes that require user attention or interaction to upgrade are documented in `docs/sources/upgrading/_index.md` |

3 years ago |

|

|

b88aa08d89

|

Loki Canary: Enable insecure-skip-verify for all requests (#8869)

**What this PR does / why we need it**: This supports cases where the cert cannot be trusted but we are not using mTLS, i.e. `loki-canary -tls=true -insecure=true` **Checklist** - [x] Reviewed the [`CONTRIBUTING.md`](https://github.com/grafana/loki/blob/main/CONTRIBUTING.md) guide (**required**) - [ ] Documentation added - [ ] Tests updated - [ ] `CHANGELOG.md` updated - [ ] Changes that require user attention or interaction to upgrade are documented in `docs/sources/upgrading/_index.md` |

3 years ago |

|

|

7264e0ef53

|

Loki: Remove global singleton of the tsdb.Store and keep it scoped to the storage.Store (#8928)

**What this PR does / why we need it**: I made this change to fix an issue with the cmd/migrate tool, for which I'm also sneaking a few other fixes into this PR. We had a global singleton of the tsdb.Store which was leading to the migrate tool reading from and writing to the `source` storage.Store because it created the singleton with an object store client only pointing to the source store. This PR changes the scoping of that singleton to not be global but instead local to each instance of storage.Store **Which issue(s) this PR fixes**: Fixes #<issue number> **Special notes for your reviewer**: **Checklist** - [ ] Reviewed the [`CONTRIBUTING.md`](https://github.com/grafana/loki/blob/main/CONTRIBUTING.md) guide (**required**) - [ ] Documentation added - [ ] Tests updated - [ ] `CHANGELOG.md` updated - [ ] Changes that require user attention or interaction to upgrade are documented in `docs/sources/upgrading/_index.md` Signed-off-by: Edward Welch <edward.welch@grafana.com> |

3 years ago |

|

|

b892cade6a

|

Loki: Fixes incorrect query result when querying with start time == end time (#8979)

**What this PR does / why we need it**: In several places within Loki we need to determine if a query is a `range query` or `instant query`. This is done by checking whether the start and end time are equal **and** `step=0`. The downstream handler was not checking for `step=0` and thus incorrectly mapped a range query to an instant query when the query had a start time equal to its end time. There are a few other things at play here, mainly that we should really error anytime someone tries to run an instant query for logs, which would have exposed this error much more easily. But that's something I'd like to handle in a different PR as it will be considered a breaking change depending on how we do it. This PR uses an existing function we have for testing the query type and addresses the issue found in #8885 **Which issue(s) this PR fixes**: Fixes #8885 **Special notes for your reviewer**: **Checklist** - [ ] Reviewed the [`CONTRIBUTING.md`](https://github.com/grafana/loki/blob/main/CONTRIBUTING.md) guide (**required**) - [ ] Documentation added - [ ] Tests updated - [x] `CHANGELOG.md` updated - [ ] Changes that require user attention or interaction to upgrade are documented in `docs/sources/upgrading/_index.md` --------- Signed-off-by: Edward Welch <edward.welch@grafana.com> |

3 years ago |

|

|

edc6b0bff7

|

Loki: Add a limit for the [range] value on range queries (#8343)

Signed-off-by: Edward Welch <edward.welch@grafana.com>

**What this PR does / why we need it**:

Loki does not currently split queries by time to a value smaller than

what's in the [range] of a range query.

Example

```

sum(rate({job="foo"}[2d]))

```

Imagine now this query being executed over a longer window of a few days

with a step of something like 30m.

Every step evaluation would query the last [2d] of data.

There are use cases where this is desired, specifically if you force the

step to match the value in the range, however what is more common is

someone accidentally uses `[$__range]` in here instead of

`[$__interval]` within Grafana and then sets the query time selector to

a large value like 7 days.

This PR adds a limit which will fail queries that set the [range] value

higher than the configured limit.

It's disabled by default.

In the future it may be possible for Loki to perform splits within the

[range] and remove the need for this limit, but until then this can be

an important safeguard in clusters with a lot of data.
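A check of this kind might look roughly like the sketch below. The function name `checkRangeLimit` and its parameters are hypothetical and are not taken from the PR; the only behavior assumed from the description is that a limit of zero disables the check.

```go
package main

import (
	"fmt"
	"time"
)

// checkRangeLimit rejects a [range] that is longer than maxRange.
// A maxRange of 0 disables the check, mirroring "disabled by default".
func checkRangeLimit(rng, maxRange time.Duration) error {
	if maxRange > 0 && rng > maxRange {
		return fmt.Errorf("[range] %s exceeds the configured limit %s", rng, maxRange)
	}
	return nil
}

func main() {
	// sum(rate({job="foo"}[2d])) with the limit disabled, then set to 24h.
	fmt.Println(checkRangeLimit(48*time.Hour, 0))
	fmt.Println(checkRangeLimit(48*time.Hour, 24*time.Hour))
}
```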

**Which issue(s) this PR fixes**:

Fixes #8746

**Special notes for your reviewer**:

**Checklist**

- [ ] Reviewed the

[`CONTRIBUTING.md`](https://github.com/grafana/loki/blob/main/CONTRIBUTING.md)

guide (**required**)

- [ ] Documentation added

- [ ] Tests updated

- [ ] `CHANGELOG.md` updated

- [ ] Changes that require user attention or interaction to upgrade are

documented in `docs/sources/upgrading/_index.md`

---------

Signed-off-by: Edward Welch <edward.welch@grafana.com>

Co-authored-by: Karsten Jeschkies <karsten.jeschkies@grafana.com>

Co-authored-by: Vladyslav Diachenko <82767850+vlad-diachenko@users.noreply.github.com>

|

3 years ago |

|

|

69d6ee293e

|

tsdb: use unique uploader name while building tsdb index filename, same as boltdb-shipper (#8975)

**What this PR does / why we need it**: While building file names from `boltdb-shipper` and `tsdb`, we add the name of the uploader to it. To avoid collisions in filenames when running multiple ingesters with the same name, we also add a nanosecond-precision timestamp to the ingester name, which is persisted as the uploader name in the active index directory. This is not being done for the tsdb index built from ingesters, which can cause collisions in filenames when running multiple ingesters with the same name. I have moved the code to manage the uploader name to the index shipper config and used the same to share it with tsdb. |

3 years ago |

|

|

9159c1dac3

|

Loki: Improve spans usage (#8927)

**What this PR does / why we need it**: - At different places, inherit the span/spanlogger from the given context instead of instantiating a new one from scratch, which fixes spans being orphaned on a read/write operation. - At different places, turn spans into events. Events are lighter than spans and by having fewer spans in the trace, trace visualization will be cleaner without losing any details. - Adds new spans/events to places that might be a bottleneck for our writes/reads. |

3 years ago |

|

|

de84737368

|

Fix fetched chunk from store size in metric (#8971)

**What this PR does / why we need it**: The `Size()` function on `chunk.Chunk` calls out to `func (ChunkRef) Size() int` in the proto, which doesn't actually include the size of the chunk data! We need to call `chunk.Data.Size()` for that. |

3 years ago |

|

|

e7b0d4d26e

|

feat(cos): Support authentication with trusted profiles (#8939)

**What this PR does / why we need it**: Add trusted profile authentication in COS client **Which issue(s) this PR fixes**: Fixes NA **Checklist** - [x] Reviewed the [`CONTRIBUTING.md`](https://github.com/grafana/loki/blob/main/CONTRIBUTING.md) guide (**required**) - [x] Documentation added - [x] Tests updated - [x] `CHANGELOG.md` updated - [ ] Changes that require user attention or interaction to upgrade are documented in `docs/sources/upgrading/_index.md` --------- Co-authored-by: shahulsonhal <shahulsonhal@gmail.com> Co-authored-by: tareqmamari <tariq.mamari@de.ibm.com> |

3 years ago |

|

|

6bb8a071ca

|

Querier: block query by hash (#8953)

**What this PR does / why we need it**: Using the [query blocker](https://grafana.com/docs/loki/next/operations/blocking-queries/) can be unergonomic since queries can be long, require escaping, or be hard to copy from logs. This change enables an operator to block queries by their hash. |

3 years ago |

|

|

1bcf683513

|

Expose optional label matcher for label values handler (#8824)

|

3 years ago |

|

|

28a7733ede

|

Rename config for enforcing a minimum number of label matchers (#8940)

**What this PR does / why we need it**: Followup PR for https://github.com/grafana/loki/pull/8918 renaming config. See https://github.com/grafana/loki/pull/8918/files#r1151820792. **Which issue(s) this PR fixes**: Fixes https://github.com/grafana/loki-private/issues/699 **Special notes for your reviewer**: **Checklist** - [ ] Reviewed the [`CONTRIBUTING.md`](https://github.com/grafana/loki/blob/main/CONTRIBUTING.md) guide (**required**) - [x] Documentation added - [ ] Tests updated - [ ] `CHANGELOG.md` updated - [ ] Changes that require user attention or interaction to upgrade are documented in `docs/sources/upgrading/_index.md` --------- Co-authored-by: Dylan Guedes <djmgguedes@gmail.com> |

3 years ago |

|

|

abc0fd26d2

|

Enforce per tenant queue size (#8947)

**What this PR does / why we need it**: Prior to the changes in https://github.com/grafana/loki/pull/8752 the max queue size per tenant was enforced by the size of the buffered channel to which a request was enqueued. However, since we have hierarchical queues, every sub-queue has the same channel capacity as the root (tenant) queue. Therefore the total queue size per tenant needs to be tracked separately, so requests can be rejected when the max queue size is reached. Signed-off-by: Christian Haudum <christian.haudum@gmail.com> |

3 years ago |

|

|

0adedfa689

|

Revert high cardinality metric in scheduler (#8946)

**What this PR does / why we need it**:

The metric was useful for initial testing of the new scheduler queue implementation but yields high cardinality metrics, which is not desired. Also, the metric does not add additional value beyond the initial testing phase.

**Special notes for your reviewer**:

The metric was introduced with commit

|

3 years ago |

|

|

d421feafe6

|

Log when returning query-time limit (#8938)

**What this PR does / why we need it**:

At https://github.com/grafana/loki/pull/8727 we introduced various

limits that can now be configured at query time. We always compare the

value of the limit configured at query time with the value set on the

overrides for the tenant or the default if not configured (aka

original); applying the most restrictive one.
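As a rough illustration of "applying the most restrictive one", the comparison could be sketched as below. The function name `mostRestrictive` is hypothetical, and treating a zero value as "not configured" on either side is an assumption of this sketch rather than something stated in the PR.

```go
package main

import (
	"fmt"
	"time"
)

// mostRestrictive returns the smaller of the query-time limit and the original
// (per-tenant override or default) limit.
// Assumption for this sketch: a zero value means "not configured".
func mostRestrictive(queryTime, original time.Duration) time.Duration {
	switch {
	case queryTime == 0:
		return original
	case original == 0:
		return queryTime
	case queryTime < original:
		return queryTime
	default:
		return original
	}
}

func main() {
	fmt.Println(mostRestrictive(time.Minute, time.Hour)) // query-time limit is stricter
	fmt.Println(mostRestrictive(0, time.Hour))           // nothing set at query time
}
```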

If the most restrictive is the original value or the limit is not

configured at query-time, we print the following debug message:

|

3 years ago |

|

|

ee045312a9

|

Automatically Reorder Pipeline Filters (#8914)

This PR moves Line Filters to be as early in a log pipeline as possible.

For example:

`{app="foo"} | logfmt |="some stuff"` becomes `{app="foo"} |="some

stuff" | logfmt`

Any LineFilter after a `LineFormat` stage will be moved to directly

after the nearest `LineFormat` stage
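The reordering rule can be sketched as follows. This is a simplified model with hypothetical types (`stage`, `stageKind`) rather than Loki's actual syntax tree: line filters are moved to the earliest allowed position, which is the start of the pipeline or directly after the most recent `LineFormat` stage.

```go
package main

import "fmt"

type stageKind int

const (
	parser stageKind = iota
	lineFilter
	lineFormat
)

// stage is a hypothetical, simplified pipeline stage.
type stage struct {
	kind stageKind
	expr string
}

// reorder moves every line filter as early as possible without crossing a
// preceding line_format stage. The relative order of filters is preserved.
func reorder(stages []stage) []stage {
	out := make([]stage, 0, len(stages))
	insertAt := 0 // position where the next line filter is placed
	for _, s := range stages {
		switch s.kind {
		case lineFilter:
			out = append(out, stage{})
			copy(out[insertAt+1:], out[insertAt:])
			out[insertAt] = s
			insertAt++
		case lineFormat:
			out = append(out, s)
			insertAt = len(out) // later filters may not move before this stage
		default:
			out = append(out, s)
		}
	}
	return out
}

func main() {
	in := []stage{{parser, "| logfmt"}, {lineFilter, `|= "some stuff"`}}
	for _, s := range reorder(in) {
		fmt.Println(s.expr)
	}
}
```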

benchmarks:

```
goos: linux
goarch: amd64
pkg: github.com/grafana/loki/pkg/logql/syntax
cpu: 11th Gen Intel(R) Core(TM) i7-1165G7 @ 2.80GHz
                    │ reorder_old.txt │           reorder_new.txt           │
                    │     sec/op      │   sec/op     vs base                │
ReorderedPipeline-8      2104.5n ± 3%   173.9n ± 2%   -91.74% (p=0.000 n=10)

                    │ reorder_old.txt │           reorder_new.txt           │
                    │      B/op       │    B/op      vs base                │
ReorderedPipeline-8        336.0 ± 0%      0.0 ± 0%  -100.00% (p=0.000 n=10)

                    │ reorder_old.txt │           reorder_new.txt           │
                    │    allocs/op    │  allocs/op   vs base                │
ReorderedPipeline-8        16.00 ± 0%     0.00 ± 0%  -100.00% (p=0.000 n=10)
```

|

3 years ago |

|

|

45775c82f7

|

Implement `RequiredNumberLabels` query limit (#8918)

**What this PR does / why we need it**: As pointed out in https://github.com/grafana/loki/pull/8851, some queries can impose a great workload on a cluster by selecting too many streams. Similarly to the `RequiredLabels` limit introduced at https://github.com/grafana/loki/pull/8851, here we add a new limit `RequiredNumberLabels` to require queries to specify at least N label matchers. For example, if the limit is set to 2, then the query should contain at least 2 label matchers. This limit can be configured per tenant and at query time. **Which issue(s) this PR fixes**: Fixes https://github.com/grafana/loki-private/issues/699 **Special notes for your reviewer**: **Checklist** - [x] Reviewed the [`CONTRIBUTING.md`](https://github.com/grafana/loki/blob/main/CONTRIBUTING.md) guide (**required**) - [x] Documentation added - [x] Tests updated - [x] `CHANGELOG.md` updated - [ ] Changes that require user attention or interaction to upgrade are documented in `docs/sources/upgrading/_index.md` --------- Co-authored-by: Dylan Guedes <djmgguedes@gmail.com> |

3 years ago |

|

|

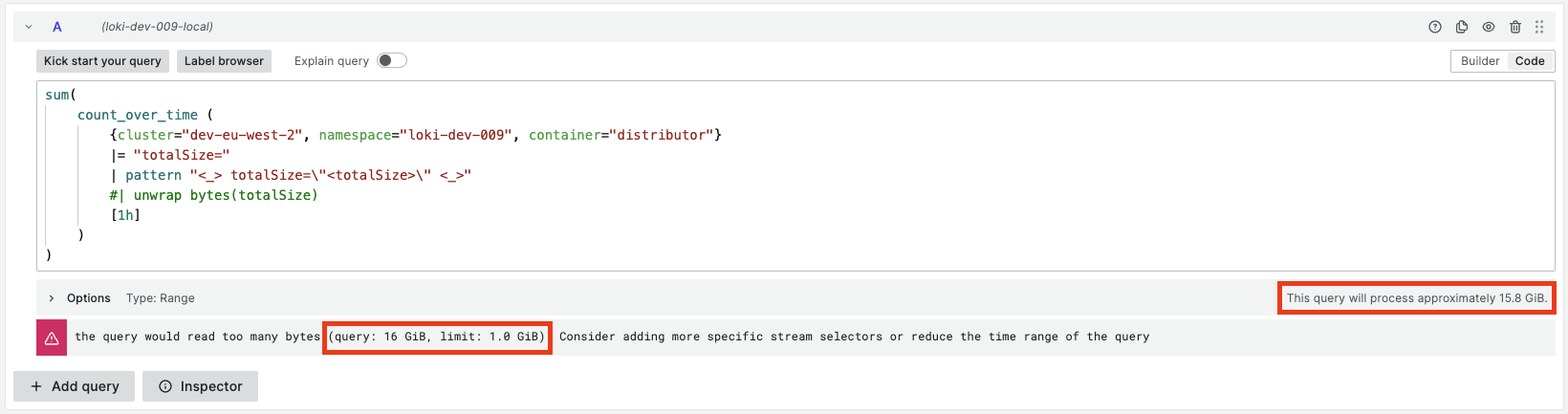

ee69f2bd37

|

Split index request in 24h intervals (#8909)

**What this PR does / why we need it**: At https://github.com/grafana/loki/pull/8670, we applied a time split of 24h intervals to all index stats requests to enforce the `max_query_bytes_read` and `max_querier_bytes_read` limits. When the limit is surpassed, the following message gets displayed:  As can be seen, the reported bytes read by the query are not the same as those reported by Grafana in the lower right corner of the query editor. This is because: 1. The index stats request for enforcing the limit is split into subqueries of 24h. The other index stats request is not time split. 2. When enforcing the limit, we are not displaying the bytes in powers of 2, but powers of 10 ([see here][2]). I.e. 1KB is 1000B vs 1KiB is 1024B. This PR adds the same logic to all index stats requests so we also time split by 24h intervals all requests that hit the Index Stats API endpoint. We also use powers of 2 instead of 10 on the message when enforcing `max_query_bytes_read` and `max_querier_bytes_read`.  Note that the library we use under the hood to print the bytes rounds up and down to the nearest integer ([see][3]); that's why we see 16GiB compared to the 15.5GB in the Grafana query editor. **Which issue(s) this PR fixes**: Fixes https://github.com/grafana/loki/issues/8910 **Special notes for your reviewer**: - I refactored the `newQuerySizeLimiter` function and the rest of the _Tripperwares_ in `roundtrip.go` to reuse the new IndexStatsTripperware. So we configure the split-by-time middleware only once. 
**Checklist** - [x] Reviewed the [`CONTRIBUTING.md`](https://github.com/grafana/loki/blob/main/CONTRIBUTING.md) guide (**required**) - [x] Documentation added - [x] Tests updated - [x] `CHANGELOG.md` updated - [ ] Changes that require user attention or interaction to upgrade are documented in `docs/sources/upgrading/_index.md` [1]: https://grafana.com/docs/loki/latest/api/#index-stats [2]: https://github.com/grafana/loki/blob/main/pkg/querier/queryrange/limits.go#L367-L368 [3]: https://github.com/dustin/go-humanize/blob/master/bytes.go#L75-L78 |

3 years ago |

|

|

163fd9d8af

|

Short circuit parsing when label matchers are present (#8890)

This PR makes parsers aware of any downstream label-matcher stages at

parse time. As labels are parsed, if one has a matcher, the matcher is

checked at parse time. If the label does not match its matcher, parsing

is halted on that log line.

**ex 1:**

consider the log:

`foo=1 bar=2 baz=3`

And the query

`{} | logfmt | bar=3`

When `bar` is parsed it is immediately checked against its matcher. The

match fails, so the parser never spends time parsing the rest of the

line.

**ex 2:**

consider the log:

`foo=1 baz=3 bletch=4`

And the query

`{} | logfmt | bar=3`

`bar` is never seen in the log so the whole line is parsed.
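The two examples above can be reproduced with a small sketch. It is a toy `key=value` parser, not Loki's logfmt parser: the function `parseWithMatchers` is a hypothetical name, only equality matchers are modeled, and quoting is ignored.

```go
package main

import (
	"fmt"
	"strings"
)

// parseWithMatchers parses space-separated key=value pairs one at a time and
// checks each parsed key against the equality matchers as soon as it is seen.
// It returns the labels parsed so far and whether parsing was halted early.
func parseWithMatchers(line string, matchers map[string]string) (map[string]string, bool) {
	labels := make(map[string]string)
	rest := line
	for rest != "" {
		var field string
		field, rest, _ = strings.Cut(rest, " ")
		key, value, found := strings.Cut(field, "=")
		if !found {
			continue
		}
		labels[key] = value
		if want, ok := matchers[key]; ok && want != value {
			return labels, true // halt: the rest of the line is never parsed
		}
	}
	return labels, false
}

func main() {
	matchers := map[string]string{"bar": "3"}

	labels, halted := parseWithMatchers("foo=1 bar=2 baz=3", matchers)
	fmt.Println(len(labels), halted) // ex 1: parsing stops at bar

	labels, halted = parseWithMatchers("foo=1 baz=3 bletch=4", matchers)
	fmt.Println(len(labels), halted) // ex 2: bar never appears, whole line parsed
}
```

In the second case the line is fully parsed and it is still up to the downstream label filter to reject it, since `bar` is absent.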

**Benchmarks:**

```
                                                 │ parsers__old_2.txt │          parsers__new_3.txt          │
                                                 │       sec/op       │    sec/op     vs base                │
_Parser/json/inline_stages-8                           3413.5n ±  5%    766.4n ± 4%  -77.55% (p=0.000 n=10)
_Parser/jsonParser-not_json_line/inline_stages-8        101.5n ±  6%    103.1n ± 8%        ~ (p=0.645 n=10)
_Parser/unpack/inline_stages-8                          383.8n ±  4%    388.0n ± 9%        ~ (p=0.954 n=10)
_Parser/unpack-not_json_line/inline_stages-8            13.30n ±  2%    13.11n ± 1%        ~ (p=0.247 n=10)
_Parser/logfmt/inline_stages-8                         2105.5n ± 16%    727.7n ± 4%  -65.44% (p=0.000 n=10)
_Parser/regex_greedy/inline_stages-8                    4.220µ ±  4%    4.175µ ± 4%        ~ (p=0.739 n=10)
_Parser/regex_status_digits/inline_stages-8             319.8n ±  5%    326.4n ± 8%        ~ (p=0.481 n=10)
_Parser/pattern/inline_stages-8                         185.2n ±  7%    154.2n ± 3%  -16.74% (p=0.000 n=10)

                                                 │ parsers__old_2.txt │          parsers__new_3.txt          │
                                                 │        B/op        │     B/op      vs base                │
_Parser/json/inline_stages-8                             280.00 ± 0%     64.00 ± 0%  -77.14% (p=0.000 n=10)
_Parser/jsonParser-not_json_line/inline_stages-8          16.00 ± 0%     16.00 ± 0%        ~ (p=1.000 n=10)
_Parser/unpack/inline_stages-8                            80.00 ± 0%     80.00 ± 0%        ~ (p=1.000 n=10)
_Parser/unpack-not_json_line/inline_stages-8              0.000 ± 0%     0.000 ± 0%        ~ (p=1.000 n=10)
_Parser/logfmt/inline_stages-8                           336.00 ± 0%     74.00 ± 0%  -77.98% (p=0.000 n=10)
_Parser/regex_greedy/inline_stages-8                      193.0 ± 1%     192.0 ± 1%        ~ (p=0.656 n=10)
_Parser/regex_status_digits/inline_stages-8               51.00 ± 0%     51.00 ± 0%        ~ (p=1.000 n=10)
_Parser/pattern/inline_stages-8                          35.000 ± 0%     3.000 ± 0%  -91.43% (p=0.000 n=10)

                                                 │ parsers__old_2.txt │          parsers__new_3.txt          │
                                                 │     allocs/op      │   allocs/op   vs base                │
_Parser/json/inline_stages-8                             18.000 ± 0%     4.000 ± 0%  -77.78% (p=0.000 n=10)
_Parser/jsonParser-not_json_line/inline_stages-8          1.000 ± 0%     1.000 ± 0%        ~ (p=1.000 n=10)
_Parser/unpack/inline_stages-8                            4.000 ± 0%     4.000 ± 0%        ~ (p=1.000 n=10)
_Parser/unpack-not_json_line/inline_stages-8              0.000 ± 0%     0.000 ± 0%        ~ (p=1.000 n=10)
_Parser/logfmt/inline_stages-8                           16.000 ± 0%     6.000 ± 0%  -62.50% (p=0.000 n=10)
_Parser/regex_greedy/inline_stages-8                      2.000 ± 0%     2.000 ± 0%        ~ (p=1.000 n=10)
_Parser/regex_status_digits/inline_stages-8               2.000 ± 0%     2.000 ± 0%        ~ (p=1.000 n=10)
_Parser/pattern/inline_stages-8                           2.000 ± 0%     1.000 ± 0%  -50.00% (p=0.000 n=10)
```

---------

Co-authored-by: Owen Diehl <ow.diehl@gmail.com>

|

3 years ago |

|

|

ffb961c439

|

feat(storage): add support for IBM cloud object storage as storage client (#8826)

**What this PR does / why we need it**: Add support for IBM cloud object storage as storage client **Which issue(s) this PR fixes**: Fixes NA **Checklist** - [x] Reviewed the [`CONTRIBUTING.md`](https://github.com/grafana/loki/blob/main/CONTRIBUTING.md) guide (**required**) - [x] Documentation added - [x] Tests updated - [ ] `CHANGELOG.md` updated --------- Signed-off-by: Shahul <shahulsonhal@gmail.com> Co-authored-by: Aman Kumar Singh <amankrsingh2110@gmail.com> Co-authored-by: Suruthi-G-K <shruthi.suruthi@gmail.com> Co-authored-by: tareqmamari <tariq.mamari@de.ibm.com> Co-authored-by: shahulsonhal <shahulsonhal@gmail.com> Co-authored-by: Aditya C S <aditya.gnu@gmail.com> Co-authored-by: Tareq Al-Maamari <tariq.mamari@gmail.com> |

3 years ago |

|

|

336e08fc4b

|

Salvacorts/max querier size messaging (#8916)

**What this PR does / why we need it**: In https://github.com/grafana/loki/pull/8670 we introduced a new limit `max_querier_bytes_read`. When the limit was surpassed the following error message is printed: ``` query too large to execute on a single querier, either because parallelization is not enabled, the query is unshardable, or a shard query is too big to execute: (query: %s, limit: %s). Consider adding more specific stream selectors or reduce the time range of the query ``` As pointed out in [this comment][1], a user would have a hard time figuring out whether the cause was `parallelization is not enabled`, `the query is unshardable` or `a shard query is too big to execute`. This PR improves the error messaging for the `max_querier_bytes_read` limit to raise a different error for each of the causes above. **Which issue(s) this PR fixes**: Followup for https://github.com/grafana/loki/pull/8670 **Special notes for your reviewer**: **Checklist** - [x] Reviewed the [`CONTRIBUTING.md`](https://github.com/grafana/loki/blob/main/CONTRIBUTING.md) guide (**required**) - [x] Documentation added - [x] Tests updated - [ ] `CHANGELOG.md` updated - [ ] Changes that require user attention or interaction to upgrade are documented in `docs/sources/upgrading/_index.md` [1]: https://github.com/grafana/loki/pull/8670#discussion_r1146008266 --------- Co-authored-by: Danny Kopping <danny.kopping@grafana.com> |

3 years ago |

|

|

44f1d8d7f6

|

azure: respect retry config before cancelling the context (#8732)

**What this PR does / why we need it**: On GET blob operations a context timeout is set to `RequestTimeout`, however this value is not the full timeout. This is only the first timeout and afterwards there are retries. As a result, the retry configuration is never used because the context is immediately cancelled. To fix we should set the context timeout to a value larger than the Azure timeout after retries. Since the backoff is exponential and randomized we need to take the value of `MaxRetryDelay` to set an upper bound. |

3 years ago |

|

|

c9d5a91206

|

Ruler: Implement consistent rule evaluation jitter (#8896)

**What this PR does / why we need it**: This PR replaces the previous random jitter with a consistent jitter. While both are random, having the random jitter be applied _consistently_ is essential for evaluating rules on a predictable cadence. If a rule is supposed to evaluate every minute, whether it evaluates at (e.g.) `01:00` or `01:03.234` is irrelevant because the evaluation _instant_ is not adjusted, so it will produce the same result whether run at `01:00` or `01:03.234`. However, if 1000 rules are set to evaluate at `01:00`, this will create a resource contention issue. |

3 years ago |

|

|

d38d481f35

|

Distributor: add detail to stream rates failure (#8900)

**What this PR does / why we need it**: Currently we cannot see if a single ingester is the source of the `unable to get stream rates` failures. This change adds the client address to the log entry. Signed-off-by: Danny Kopping <danny.kopping@grafana.com> |

3 years ago |

|

|

cba31024d4

|

Extend scheduler queue metrics with enqueue/dequeue counters (#8891)

**What this PR does / why we need it**: Better o11y of the scheduler. This change yields new metrics with potentially high cardinality on the scheduler. Signed-off-by: Christian Haudum <christian.haudum@gmail.com> |

3 years ago |

|

|

3344d59fb5 |

Extract scheduler queue metrics into separate field

This allows for easier passing of the metrics to the scheduler instantiation. Signed-off-by: Christian Haudum <christian.haudum@gmail.com> |

3 years ago |

|

|

793a689d1f

|

Iterators: re-implement mergeEntryIterator using loser.Tree for performance (#8637)

**What this PR does / why we need it**: Building on #8351, this re-implements `mergeEntryIterator` using `loser.Tree`; the benchmark says it goes much faster but uses a bit more memory (while building the tree). ``` name old time/op new time/op delta SortIterator/merge_sort-4 10.7ms ± 4% 2.9ms ± 2% -72.74% (p=0.008 n=5+5) name old alloc/op new alloc/op delta SortIterator/merge_sort-4 11.2kB ± 0% 21.7kB ± 0% +93.45% (p=0.008 n=5+5) name old allocs/op new allocs/op delta SortIterator/merge_sort-4 6.00 ± 0% 7.00 ± 0% +16.67% (p=0.008 n=5+5) ``` The implementation is very different: rather than relying on iterators supporting `Peek()`, `mergeEntryIterator` now pulls items into its buffer until it finds one with a different timestamp or stream, and always works off what is in the buffer. The comment `"[we] pop the ones whose common value occurs most often."` did not appear to match the previous implementation, and no attempt was made to match this comment. A `Push()` function was added to `loser.Tree` to support live-streaming. This works by finding or making an empty slot, then re-running the initialize function to find the new winner. A consequence is that the previous "winner" value is lost after calling `Push()`, and users must call `Next()` to see the next item. A couple of tests had to be amended to avoid assuming particular behaviour of the implementation; I recommend that reviewers consider these closely. **Checklist** - [x] Reviewed the [`CONTRIBUTING.md`](https://github.com/grafana/loki/blob/main/CONTRIBUTING.md) guide (**required**) - NA Documentation added - [x] Tests updated - NA `CHANGELOG.md` updated - NA Changes that require user attention or interaction to upgrade are documented in `docs/sources/upgrading/_index.md` |

3 years ago |

|

|

3b57ad2a54

|

WAL: remove ePool that is unused (#8669)

**What this PR does / why we need it**: Nothing is ever fetched from the pool - `GetEntries()` is never called. And the loop to call `PutEntries()` follows a call where the slice it ranges over is reset to empty, so that loop never added anything to the pool. **Checklist** - [x] Reviewed the [`CONTRIBUTING.md`](https://github.com/grafana/loki/blob/main/CONTRIBUTING.md) guide (**required**) - NA Documentation added - NA Tests updated - NA `CHANGELOG.md` updated - NA Changes that require user attention or interaction to upgrade are documented in `docs/sources/upgrading/_index.md` |

3 years ago |

|

|

3ed9f0c9ef

|

Canary: support filtering / parsing logs with LogQL (#8871)

**What this PR does / why we need it**: The canary logs can be shipped to Loki in various formats. This allows users of the canary to parse out the canary's logs from any format, e.g. nested JSON. The alternative is to do something different for the canary logs to prevent the logs being wrapped / manipulated (e.g. write to Loki directly, or use a different log pipeline), which limits the benefits of the canary. I've confirmed this works locally. **Which issue(s) this PR fixes**: Fixes #7775 **Checklist** - [x] Reviewed the [`CONTRIBUTING.md`](https://github.com/grafana/loki/blob/main/CONTRIBUTING.md) guide (**required**) - [ ] Documentation added - [ ] Tests updated - [ ] `CHANGELOG.md` updated - [ ] Changes that require user attention or interaction to upgrade are documented in `docs/sources/upgrading/_index.md` |

3 years ago |

|

|

4f94b89fdb

|

Loki: Add more spans to write path (#8888)

**What this PR does / why we need it**: Add new spans to our write path to better determine which operations are holding back our write performance. |

3 years ago |

|

|

d24fe3e68b

|

Max bytes read limit (#8670)

**What this PR does / why we need it**: This PR implements two new per-tenant limits that are enforced on log and metric queries (both range and instant) when TSDB is used: - `max_query_bytes_read`: Refuse queries that would read more than the configured bytes here. Overall limit regardless of splitting/sharding. The goal is to refuse queries that would take too long. The default value of 0 disables this limit. - `max_querier_bytes_read`: Refuse queries in which any of their subqueries after splitting and sharding would read more than the configured bytes here. The goal is to avoid a querier from running a query that would load too much data in memory and can potentially get OOMed. The default value of 0 disables this limit. These new limits can be configured per tenant and per query (see https://github.com/grafana/loki/pull/8727). The bytes a query would read are estimated through TSDB's index stats. Even though they are not exact, they are good enough to have a rough estimation of whether a query is too big to run or not. For more details on this refer to this discussion in the PR: https://github.com/grafana/loki/pull/8670#discussion_r1124858508. Both limits are implemented in the frontend. Even though we considered implementing `max_querier_bytes_read` in the querier, this way, the limits for pre and post splitting/sharding queries are enforced close to each other on the same component. Moreover, this way we can reduce the number of index stats requests issued to the index gateways by reusing the stats gathered while sharding the query. With regard to how index stats requests are issued: - We parallelize index stats requests by splitting them into queries that span up to 24h since our indices are sharded by 24h periods. On top of that, this prevents a single index gateway from processing a single huge request like `{app=~".+"} for 30d`. 
- If sharding is enabled and the query is shardable, for `max_querier_bytes_read`, we re-use the stats requests issued by the sharding middleware. Specifically, we look at the [bytesPerShard][1] to enforce this limit. Note that once we merge this PR and enable these limits, the load of index stats requests will increase substantially and we may discover bottlenecks in our index gateways and TSDB. After speaking with @owen-d, we think it should be fine as, if needed, we can scale up our index gateways and support caching index stats requests. Here's a demo of this working: <img width="1647" alt="image" src="https://user-images.githubusercontent.com/8354290/226918478-d4b6c2fd-de4d-478a-9c8b-e38fe148fa95.png"> <img width="1647" alt="image" src="https://user-images.githubusercontent.com/8354290/226918798-a71b1db8-ea68-4d00-933b-e5eb1524d240.png"> **Which issue(s) this PR fixes**: This PR addresses https://github.com/grafana/loki-private/issues/674. **Special notes for your reviewer**: - @jeschkies has reviewed the changes related to query-time limits. - I've done some refactoring in this PR: - Extracted logic to get stats for a set of matchers into a new function [getStatsForMatchers][2]. - Extracted the _Handler_ interface implementation for [queryrangebase.roundTripper][3] into a new type [queryrangebase.roundTripperHandler][4]. This is used to create the handler that skips the rest of the configured middlewares when sending index stats requests ([example][5]). **Checklist** - [x] Reviewed the [`CONTRIBUTING.md`](https://github.com/grafana/loki/blob/main/CONTRIBUTING.md) guide (**required**) - [x] Documentation added - [x] Tests updated - [x] `CHANGELOG.md` updated - [ ] Changes that require user attention or interaction to upgrade are documented in `docs/sources/upgrading/_index.md` [1]: |

3 years ago |

|

|

94725e7908

|

Define `RequiredLabels` query limit. (#8851)

**What this PR does / why we need it**: Some end-users can impose a great workload on a cluster by selecting too many streams in their queries. We should be able to limit them. Therefore we introduce a new limit `RequiredLabelMatchers` which lists label names that must be included in the stream selectors. The implementation follows the same approach as for max query limit. **Which issue(s) this PR fixes**: Fixes #8745 **Checklist** - [ ] Reviewed the [`CONTRIBUTING.md`](https://github.com/grafana/loki/blob/main/CONTRIBUTING.md) guide (**required**) - [x] Documentation added - [x] Tests updated - [x] `CHANGELOG.md` updated - [ ] Changes that require user attention or interaction to upgrade are documented in `docs/sources/upgrading/_index.md` |

3 years ago |

|

|

4e893a0a88

|

tsdb: sample chunk info from tsdb index to limit the amount of chunkrefs we read from index (#8742)

**What this PR does / why we need it**:

Previously, we read the info of all the chunks from the index and then filtered it in a layer above, within the tsdb code. This wastes a lot of resources when there are many chunks in the index but we need only a few of them for the query range.

Before jumping into how and why I went with chunk sampling, here are some points to consider:

* Chunks in the index are sorted by the start time of the chunk. Since this does not tell us much about the end time of the chunks, we can only skip chunks that start after the end time of the query. That would still make us process lots of chunks when the query touches chunks that are near the end of the table boundary.

* Data is written to tsdb with variable-length encoding. This means we can't skip/jump chunks, since each chunk info might vary in the number of bytes we write.

Here is how I have implemented the sampling approach:

* Chunks are sampled considering their end times from the index and stored in memory.

* Here is how `chunkSample` is defined:

```
type chunkSample struct {
	largestMaxt   int64 // holds largest chunk end time we have seen so far. In other words all the earlier chunks have maxt <= largestMaxt
	idx           int   // index of the chunk in the list which helps with determining position of sampled chunk
	offset        int   // offset is relative to beginning chunk info block i.e after series labels info and chunk count etc
	prevChunkMaxt int64 // chunk times are stored as deltas. This is used for calculating mint of sampled chunk
}
```

* When a query comes in, we find the `chunkSample` with the largest `largestMaxt` that is less than the given query start time. In other words, we find a chunk sample which skips all/most of the chunks that end before the query start time.

* Once we have found such a chunk sample, we sequentially go through the chunks and consider only the ones that overlap with the query range. We stop processing chunks as soon as we see a chunk that starts after the end time of the query, since the chunks are sorted by start time.

* Sampling of chunks is done lazily, only for the series that are queried, so we do not waste any resources on sampling series that are not queried.

* To avoid sampling too many chunks, I am sampling chunks at `1h` steps, i.e. given a sampled chunk with chunk end time `t`, the next chunk would be sampled with end time >= `t + 1h`. This means we should typically have ~28 chunks sampled for each series queried from each index file, considering the 2h default chunk length and chunks overlapping multiple tables.
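The lookup described in the list above can be sketched roughly as follows. This is a simplified illustration, not the code from this PR: `chunkMeta`, `sample`, `buildSamples`, and `chunksInRange` are hypothetical names, the chunks live in an in-memory slice rather than a variable-length encoded index, the `offset` and `prevChunkMaxt` fields are omitted, and both query bounds are assumed to be inclusive.

```go
package main

import (
	"fmt"
	"sort"
)

// chunkMeta is a hypothetical, simplified stand-in for one chunk entry in the index.
type chunkMeta struct {
	mint, maxt int64
}

// sample is a reduced form of chunkSample: it keeps only the running
// largest end time and the position of the sampled chunk.
type sample struct {
	largestMaxt int64
	idx         int
}

// buildSamples walks the chunks (sorted by mint) once and records a sample
// whenever the largest end time seen so far has advanced by at least step
// since the previous sample.
func buildSamples(chunks []chunkMeta, step int64) []sample {
	var samples []sample
	var largest int64
	for i, c := range chunks {
		if i == 0 || c.maxt > largest {
			largest = c.maxt
		}
		if len(samples) == 0 || largest >= samples[len(samples)-1].largestMaxt+step {
			samples = append(samples, sample{largestMaxt: largest, idx: i})
		}
	}
	return samples
}

// chunksInRange returns the chunks overlapping [from, through] (inclusive bounds
// are an assumption of this sketch). It starts at the last sample whose
// largestMaxt is below from, then scans sequentially.
func chunksInRange(chunks []chunkMeta, samples []sample, from, through int64) []chunkMeta {
	// samples are ordered by increasing largestMaxt, so binary search applies.
	i := sort.Search(len(samples), func(i int) bool { return samples[i].largestMaxt >= from })
	start := 0
	if i > 0 {
		start = samples[i-1].idx // every chunk before this one ends before from
	}
	var out []chunkMeta
	for _, c := range chunks[start:] {
		if c.mint > through {
			break // chunks are sorted by mint, so nothing later can overlap
		}
		if c.maxt >= from {
			out = append(out, c)
		}
	}
	return out
}

func main() {
	// Ten chunks, one starting every hour and each two hours long (times in hours).
	var chunks []chunkMeta
	for i := int64(0); i < 10; i++ {
		chunks = append(chunks, chunkMeta{mint: i, maxt: i + 2})
	}
	samples := buildSamples(chunks, 1)
	fmt.Println(chunksInRange(chunks, samples, 6, 7))
}
```

The binary search only picks the starting point. The overlap check in the sequential scan still decides which chunks are returned, so a coarser sampling step costs extra scanning but does not change the result.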

Here are the benchmark results showing the difference it makes:

```
benchmark                              old ns/op     new ns/op     delta
BenchmarkTSDBIndex_GetChunkRefs-10     12420741      4764309       -61.64%
BenchmarkTSDBIndex_GetChunkRefs-10     12412014      4794156       -61.37%
BenchmarkTSDBIndex_GetChunkRefs-10     12382716      4748571       -61.65%
BenchmarkTSDBIndex_GetChunkRefs-10     12391397      4691054       -62.14%
BenchmarkTSDBIndex_GetChunkRefs-10     12272200      5023567       -59.07%

benchmark                              old allocs    new allocs    delta
BenchmarkTSDBIndex_GetChunkRefs-10     345653        40            -99.99%
BenchmarkTSDBIndex_GetChunkRefs-10     345653        40            -99.99%
BenchmarkTSDBIndex_GetChunkRefs-10     345653        40            -99.99%
BenchmarkTSDBIndex_GetChunkRefs-10     345653        40            -99.99%
BenchmarkTSDBIndex_GetChunkRefs-10     345653        40            -99.99%

benchmark                              old bytes     new bytes     delta
BenchmarkTSDBIndex_GetChunkRefs-10     27286536      6398855       -76.55%
BenchmarkTSDBIndex_GetChunkRefs-10     27286571      6399276       -76.55%
BenchmarkTSDBIndex_GetChunkRefs-10     27286566      6400699       -76.54%
BenchmarkTSDBIndex_GetChunkRefs-10     27286561      6399158       -76.55%
BenchmarkTSDBIndex_GetChunkRefs-10     27286580      6399643       -76.55%
```

**Checklist**

- [x] Tests updated

|

3 years ago |

|

|

9844fad8b4

|

Rename LeafQueue to TreeQueue (#8856)

**What this PR does / why we need it**: `TreeQueue` is the semantically more correct term for this type of queue. Signed-off-by: Christian Haudum <christian.haudum@gmail.com> |

3 years ago |

|

|

9d20bed26a

|

Short circuit parsers (#8724)